User-Provisioned Infrastructure for OpenShift with orcharhino

Recently, a participant in a Kubernetes training asked me whether OpenShift could be set up with orcharhino. He had been using orcharhino for some time and was familiar with its automation methods, but Kubernetes, and OpenShift in particular, were still relatively new to him. “Yes,” I replied, but then paused, brooding and silent. We had done this before, but that was with OpenShift 3.

The installation of OpenShift, Red Hat’s Kubernetes distribution, changed quite fundamentally with the jump to version 4. But on closer inspection, orcharhino had all the building blocks for the new method as well, maybe even more. So I revised my answer to “Yes, well, it should work.” By now I can say that it works very well.

OpenShift 3 ran on RHEL servers and used Ansible to install and configure all the Kubernetes services on top of them. Once this basic framework was in place, the customizations that turn Kubernetes into OpenShift were applied, roughly speaking. In this model, orcharhino provided lifecycle management for the hosts and, when needed, acted as a registry mirror, for example when Kubernetes had to be deployed in environments without Internet access.

OpenShift 4 drops RHEL and Ansible from this procedure and replaces them with CoreOS, configured via MachineConfig and Ignition. Whether that is good or bad, it is such a sweeping change that hardly anything you remember from installing version 3 still applies.

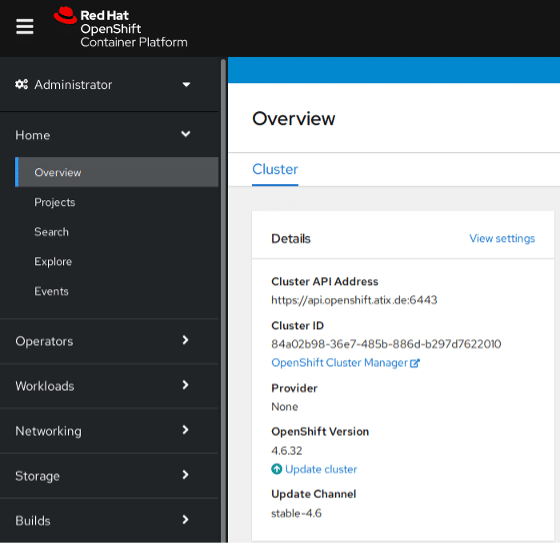

To install OpenShift 4, you first start a bootstrap node. The master nodes, which later form the control plane, start next and get their configuration from the bootstrap node. They form an etcd cluster, which the bootstrap node then populates, and gradually all the essential OpenShift services start. Once the master nodes are fully equipped, the bootstrap node is no longer needed. Now it is time to start the worker nodes, which get their configuration from the master nodes. After a few more minutes, you have a complete OpenShift installation in front of you.
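As a rough mental model, this sequence is a strict ordering of phases. The following Python sketch only encodes that order; the step names and the function `install_plan` are hypothetical. In a real installation you follow the progress with `openshift-install wait-for bootstrap-complete` and `openshift-install wait-for install-complete`, not with code like this.

```python
from typing import List


def install_plan(masters: int, workers: int) -> List[str]:
    """Return the install steps in the order described above (simplified, hypothetical model)."""
    if masters < 1:
        raise ValueError("at least one master node is required")
    steps = ["start bootstrap"]
    # The masters fetch their configuration from the bootstrap node ...
    steps += [f"start master-{i}" for i in range(masters)]
    # ... form an etcd cluster, which the bootstrap node then populates.
    steps.append("masters form etcd cluster")
    steps.append("bootstrap populates etcd")
    # Once the control plane is fully equipped, the bootstrap node is done.
    steps.append("control plane ready")
    steps.append("bootstrap no longer needed")
    # Only now are the workers started; they get their configuration from the masters.
    steps += [f"start worker-{i}" for i in range(workers)]
    steps.append("installation complete")
    return steps


if __name__ == "__main__":
    for step in install_plan(masters=3, workers=2):
        print(step)
```

The point of the model is the gate in the middle: nothing worker-related happens before the control plane reports ready.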

Red Hat offers two installation methods: either users provide the infrastructure themselves, or the installer takes care of the resources. For the second variant, the infrastructure team should have access to the company credit card and be allowed to book cloud instances as they see fit. It is seductively simple, but of course limited: this variant works only with the hyperscalers, VMware vSphere, RHV, and certain IBM products.

The first variant, called User Provisioned Infrastructure (UPI), is where orcharhino comes in handy. To see why, you need to understand how CoreOS is installed. It is installed from a file system image that can only be customized minimally. This customization is done via Ignition, which plays roughly the same role that Anaconda/Kickstart plays for CentOS and RHEL. The templates for the Ignition files can be provided and customized by orcharhino, for example to tell a host that it takes the role of the bootstrap node and which cluster it belongs to. The complete installation of the CoreOS nodes can thus be triggered by orcharhino.
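In a UPI installation, `openshift-install create ignition-configs` generates `bootstrap.ign`, `master.ign` and `worker.ign`. The master and worker files are small "pointer" configs that tell Ignition to merge the real configuration from the Machine Config Server at `api-int.<cluster>.<base domain>:22623`; during bootstrapping, that endpoint is served by the bootstrap node, which matches the flow described above. A role-aware template could render such a pointer per host. The Python sketch below only shows the structure: the function name, the hosted bootstrap URL and the Ignition spec version 3.2.0 are assumptions, and in practice you would start from the files the installer generates.

```python
import base64
import json
from typing import Optional

# Assumption: the Ignition spec version depends on the OpenShift release.
IGNITION_VERSION = "3.2.0"
# Port of the Machine Config Server behind the internal API name.
MCS_PORT = 22623


def pointer_ignition(role: str, cluster: str, base_domain: str,
                     ca_pem: str = "", bootstrap_url: Optional[str] = None) -> str:
    """Render a minimal Ignition config that merges the real config from a URL (sketch)."""
    if role == "bootstrap":
        # Hypothetical: the full bootstrap.ign is hosted on some HTTP server.
        if not bootstrap_url:
            raise ValueError("bootstrap role needs the URL of bootstrap.ign")
        source = bootstrap_url
    elif role in ("master", "worker"):
        if not ca_pem:
            raise ValueError("master/worker roles need the cluster CA certificate")
        source = f"https://api-int.{cluster}.{base_domain}:{MCS_PORT}/config/{role}"
    else:
        raise ValueError(f"unknown role: {role}")

    ignition: dict = {"version": IGNITION_VERSION,
                      "config": {"merge": [{"source": source}]}}
    if role != "bootstrap":
        # Trust the CA that signed the Machine Config Server's certificate.
        encoded = base64.b64encode(ca_pem.encode()).decode()
        ignition["security"] = {
            "tls": {"certificateAuthorities": [
                {"source": f"data:text/plain;charset=utf-8;base64,{encoded}"}
            ]}
        }
    return json.dumps({"ignition": ignition}, indent=2)
```

For example, `pointer_ignition("worker", "ocp", "example.com", ca_pem=...)` yields a config that points at `https://api-int.ocp.example.com:22623/config/worker`. In orcharhino, the same role and cluster decision would sit in a provisioning template fed by host parameters.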

The actual OpenShift installation then takes place within the network of CoreOS hosts. With direct Internet access, everything else works without any support from orcharhino. In air-gapped scenarios, i.e. without direct Internet access, the required container images must be available on the local network. As with OpenShift 3, orcharhino can take care of this by acting as a local container registry.
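For the air-gapped case, the cluster has to be told where the mirrored release images live. When a release is mirrored with `oc adm release mirror`, all its images typically end up in one mirror repository, and `install-config.yaml` maps the upstream repositories to it via `imageContentSources` (newer releases use `imageDigestSources` instead). The Python sketch below builds those entries and imitates how a pull by digest is redirected. The registry path `orcharhino.example.com/ocp4/openshift4` is hypothetical, the layout may differ when orcharhino syncs the images, and the fallback to the original source that the container runtime also performs is ignored.

```python
from typing import Dict, List

# Upstream repositories of an OpenShift 4 release payload.
RELEASE_SOURCES = [
    "quay.io/openshift-release-dev/ocp-release",
    "quay.io/openshift-release-dev/ocp-v4.0-art-dev",
]


def image_content_sources(mirror_repo: str) -> List[Dict[str, object]]:
    """Build the imageContentSources entries for install-config.yaml."""
    return [{"mirrors": [mirror_repo], "source": src} for src in RELEASE_SOURCES]


def resolve(ref: str, mirror_repo: str) -> str:
    """Map an upstream image reference to the local mirror (simplified)."""
    if "@sha256:" not in ref:
        # Mirror entries are only consulted for pulls by digest, not by tag.
        return ref
    repo, digest = ref.split("@", 1)
    if repo in RELEASE_SOURCES:
        return f"{mirror_repo}@{digest}"
    # Images from other repositories are not covered by these entries.
    return ref


if __name__ == "__main__":
    mirror = "orcharhino.example.com/ocp4/openshift4"  # hypothetical path
    for entry in image_content_sources(mirror):
        print(entry)
```

Pulling by digest is what makes this safe: the mirror must serve exactly the same image bytes, whichever registry answers.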

All these steps can be automated via the Foreman Ansible Modules. A relatively short playbook thus lets you install an OpenShift cluster with a single command, in about ten minutes.
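The Foreman Ansible Modules talk to the Foreman REST API, so the same flow can be sketched in plain Python against that API. This is not the playbook from the talk, only an illustration under stated assumptions: `POST /api/hosts` with `build` and `host_parameters_attributes` is Foreman's host API, whereas the parameter names `openshift_role` and `openshift_cluster`, the single hostgroup id and the `wait` hook are hypothetical. Depending on the setup, a real host creation needs further attributes such as a MAC address or compute resource.

```python
import base64
import json
import urllib.request
from typing import Callable, Dict, List, Tuple

ApiCall = Tuple[str, Dict[str, object]]


def host_payload(name: str, hostgroup_id: int, role: str, cluster: str) -> Dict[str, object]:
    """Body for POST /api/hosts; the parameter names are hypothetical and could be
    read by an Ignition template via host_param()."""
    return {
        "host": {
            "name": name,
            "hostgroup_id": hostgroup_id,
            "build": True,
            "host_parameters_attributes": [
                {"name": "openshift_role", "value": role},
                {"name": "openshift_cluster", "value": cluster},
            ],
        }
    }


def plan(cluster: str, hostgroup_id: int, masters: int, workers: int) -> List[List[ApiCall]]:
    """Group host creations into phases: bootstrap and masters first, then workers."""
    first = [("/api/hosts", host_payload(f"{cluster}-bootstrap", hostgroup_id, "bootstrap", cluster))]
    first += [("/api/hosts", host_payload(f"{cluster}-master-{i}", hostgroup_id, "master", cluster))
              for i in range(masters)]
    second = [("/api/hosts", host_payload(f"{cluster}-worker-{i}", hostgroup_id, "worker", cluster))
              for i in range(workers)]
    return [first, second]


def post(base_url: str, user: str, password: str, path: str, body: Dict[str, object]) -> object:
    """Send one JSON request with basic auth (stdlib only)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        base_url + path,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def run(phases: List[List[ApiCall]], send: Callable[[str, Dict[str, object]], object],
        wait: Callable[[], None]) -> None:
    """Execute the phases in order, waiting between them."""
    for i, phase in enumerate(phases):
        for path, body in phase:
            send(path, body)
        if i < len(phases) - 1:
            wait()  # e.g. wrap `openshift-install wait-for bootstrap-complete`
```

Passing `send` and `wait` as functions keeps the ordering logic testable without a live orcharhino; in real use, `send` would wrap `post` with the server URL and credentials.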

More details can be found in my talk at OSAD 2021 on 6 October.

Jan Bundesmann
