When it comes to what exactly logging is, we have come across all kinds of definitions and negative examples. Unfortunately, it is still far too common for applications not to log at all, or to log only the full stack trace when an error occurs. Another (bad) approach is to add a log statement everywhere anything happens, without defining any log levels.

We would define logging as follows:

Logging means keeping track of what happens in the program flow. Depending on the environment and the importance of an event, a log entry carries a different indicator (its log level) and a different amount of detail. The goal is for every log entry to add value without overloading the reader with information.

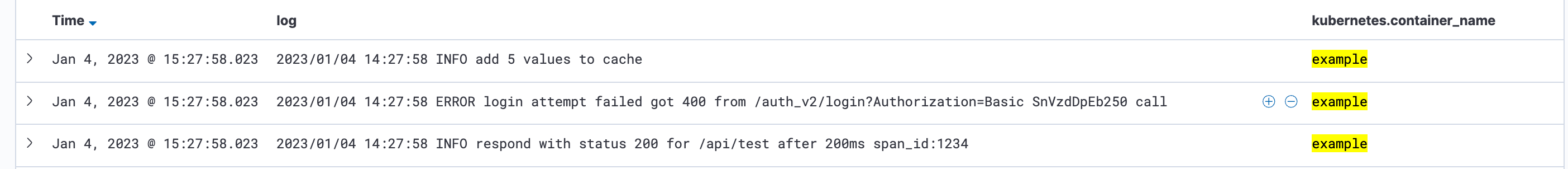

A log that does not provide any significant added value might look like this:

```text
2023/01/04 15:08:39 INFO respond successful
```

The only information we can extract from this log is that a response to an API call was sent successfully.

The same log, including details that give us added value, could look like this:

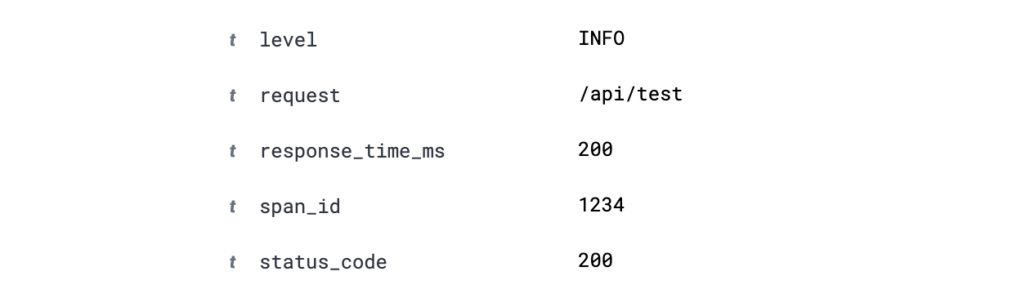

```text
2023/01/04 15:08:39 INFO respond with status 200 for /api/test after 200ms span_id:1234
```

Here we can see the status code, the requested path, how long our application took to respond, and a span ID that lets us follow this API call through tracing. If you want to learn more about tracing, take a look at this blog post.