Kubeflow at Home: AI and Kubernetes outside of Google & Co.

There are always new hot trends in the IT world: yesterday it was all about AI, ML and data science – today everything runs in containers. It's no wonder the two worlds are coming together: it is now possible to run TensorFlow-based AI environments in a Kubernetes cluster. Possible, but not necessarily easy, as we learned.

A client wanted to have support for advanced data processing and scientific research in a dedicated cluster: they had chosen Kubeflow and asked for our help with the installation.

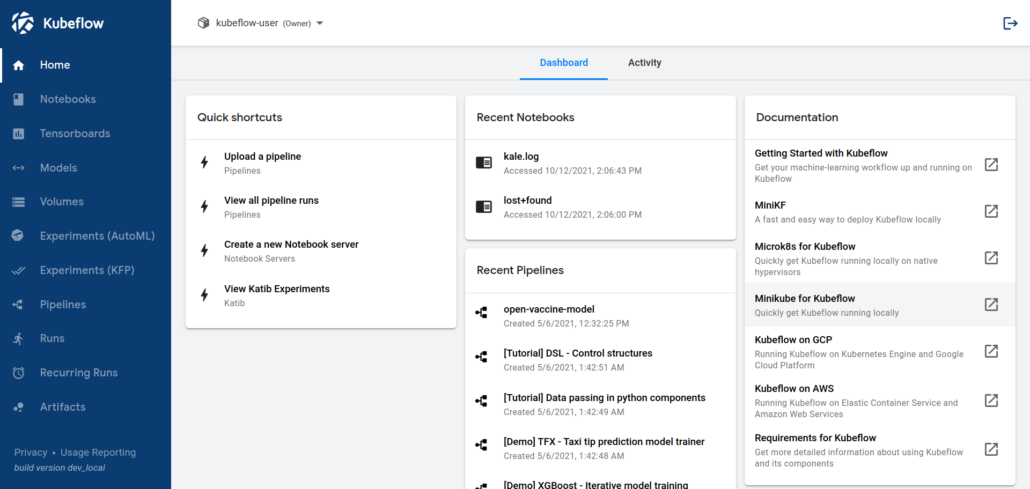

What is Kubeflow anyway? As the name suggests, it is a platform (actually several interconnected applications) that makes data processing and AI tooling (such as TensorFlow, Jupyter Notebooks, etc.) easy to deploy in Kubernetes clusters. Google developed it as open source and made it available to the public. There are even dedicated installation variants – provided the environment runs at one of the large cloud providers and not in the company's own data center.

![Kubeflow central dashboard](https://www.Kubeflow.org/docs/images/central-ui.png)

Unfortunately, the quantity and quality of the available documentation don't help much. Getting Kubeflow working with one of the major cloud providers is simple: click here, run that command, done. But otherwise? Very few starting points. Understandable on the one hand: Google & Co. would probably prefer the application to be used on their infrastructure. But Kubeflow is open source, so it should run independently of the underlying infrastructure!

The Kubeflow website now also lists instructions from other parties on how to deploy the entire platform. But these are very specific as well, usually tied to a particular version and often written by one operator for its own platform:

| Name | Maintainer | Platform | Kubeflow version |
| --- | --- | --- | --- |
| Kubeflow on AWS | Amazon Web Services (AWS) | Amazon Elastic Kubernetes Service (EKS) | 1.2 |
| Kubeflow on Azure | Microsoft Azure | Azure Kubernetes Service (AKS) | 1.2 |
| Kubeflow on Google Cloud | Google Cloud | Google Kubernetes Engine (GKE) | 1.4 |
| Kubeflow on IBM Cloud | IBM Cloud | IBM Cloud Kubernetes Service (IKS) | 1.4 |
| Kubeflow on Nutanix | Nutanix | Nutanix Karbon | 1.4 |
| Kubeflow on OpenShift | Red Hat | OpenShift | 1.3 |
| Argoflow | Argoflow Community | Conformant Kubernetes | 1.3 |
| Arrikto MiniKF | Arrikto | AWS Marketplace, GCP Marketplace, Vagrant | 1.3 |
| Arrikto Enterprise Kubeflow | Arrikto | EKS, AKS, GKE | 1.4 |
| Kubeflow Charmed Operators | Canonical | Conformant Kubernetes | 1.3 |
| MicroK8s Kubeflow Add-on | Canonical | MicroK8s | 1.3 |

(Source: https://www.kubeflow.org/docs/started/installing-kubeflow/)

In Hungarian there is an apt expression for this: “sewing the jacket to the button”. In other words: if you want to install Kubeflow, it is best to pick a platform to suit it.

However, the platform had already been chosen by our customer: RKE2 from SUSE/Rancher. We have been looking after this setup for a while now, and so far it has worked to our satisfaction. All clusters at the customer's site run RKE2, and we want to keep them consistent. RKE2, however, does not appear in the list.

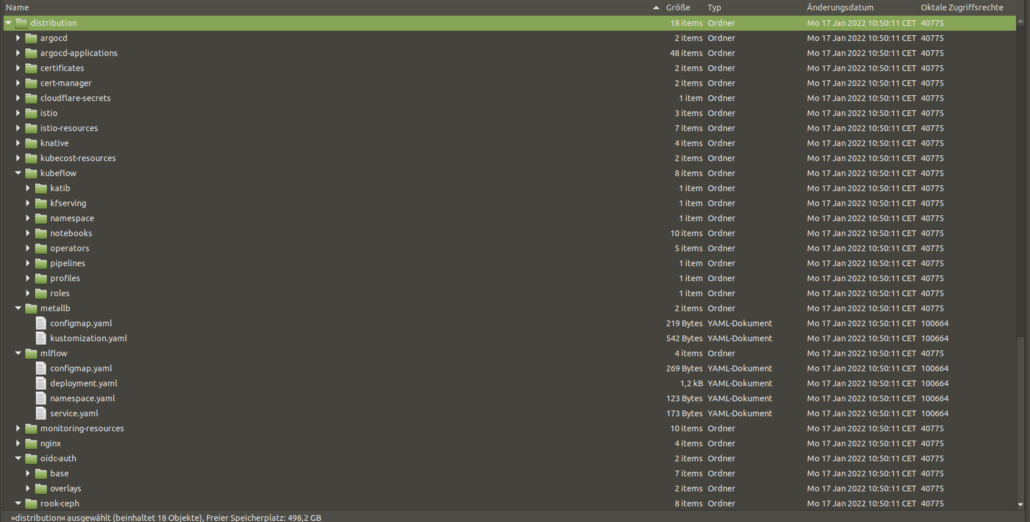

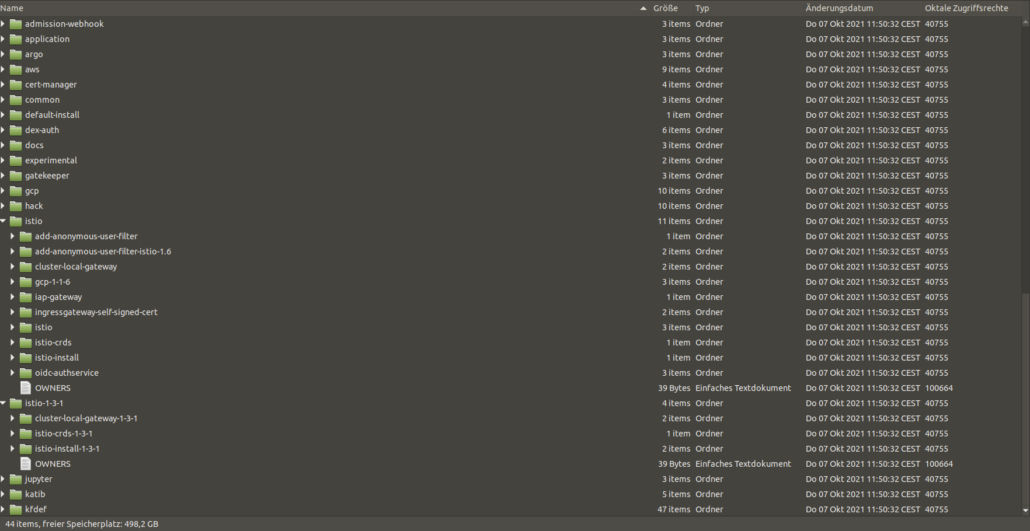

The solution closest to our goals is Argoflow: an installation option based on Argo CD, a popular GitOps continuous-delivery tool for Kubernetes. However, this solution also focuses on the familiar cloud environments and relies largely on Kustomize. On the one hand, that is good: you can use it to fine-tune which resources are created and how they behave. On the other hand, it assumes you already know how the landscape is built, what is needed, and what you have to set up to reach the desired goal. Given the volume and quality of the documentation, that is not a good starting position.

Definitely worth mentioning are a helpful article on Heise (https://www.heise.de/hintergrund/Machine-Learning-Kubeflow-richtig-einrichten-ein-Tutorial-6018408.html) and a public Git repository (https://github.com/SoeldnerConsult/kubeflow-in-tkg-cluster) by Söldner Consult GmbH. They provided some useful insights, but again missed our target: the whole approach is tailored to VMware Tanzu.

So despite these valuable insights, we had to keep researching. We were surely not the first to face this problem; someone must have solved it already.

And in fact, there is a tool that makes installation convenient in different environments and for different scenarios, and that sets up and wires together all the necessary components sensibly: kfctl (kf for Kubeflow, ctl for control, a naming pattern very popular in the Kubernetes world), which was originally developed alongside Kubeflow.

However, the project has been discontinued, and the logic has changed somewhat since the last documented state. Nevertheless, this path ultimately got us there. What matters most in our case: kfctl can set up Istio and Dex as well.

Istio and Kubeflow

Istio is a service mesh for Kubernetes: it manages networking for the pods in which it is enabled. But it is also quite resource-hungry: it injects a sidecar container into every affected pod, whose only job is to control and manipulate the network traffic between the main container and all other resources.

What is it needed for? Not an easy question to answer. Without Istio, however, Kubeflow does not work as expected. Which Istio resources are created, and which services are connected through Istio and how, is barely documented.
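To see which pods Istio has actually touched, the container names per pod are enough: an injected pod carries an additional container that Istio names `istio-proxy` by default. The following is a minimal sketch, assuming automatic injection is controlled per namespace via the `istio-injection=enabled` label and that the Kubeflow workloads live in the `kubeflow` namespace; the function names are our own, hypothetical helpers.

```shell
#!/bin/sh
# Sketch: find pods that run WITHOUT an Istio sidecar.
# Assumptions: the sidecar container is called "istio-proxy" (Istio default)
# and the workloads live in the "kubeflow" namespace unless told otherwise.

# pods_without_sidecar: reads "<pod><TAB><space-separated container names>"
# lines on stdin and prints the names of pods lacking "istio-proxy".
pods_without_sidecar() {
  awk -F '\t' '{
    found = 0
    n = split($2, c, " ")
    for (i = 1; i <= n; i++) if (c[i] == "istio-proxy") found = 1
    if (!found) print $1
  }'
}

# list_containers: one line per pod: name, TAB, names of its containers.
list_containers() {
  kubectl get pods -n "${1:-kubeflow}" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].name}{"\n"}{end}'
}

# check_namespace: show the injection label, then pods without a sidecar.
check_namespace() {
  ns="${1:-kubeflow}"
  kubectl get namespace "$ns" -L istio-injection
  list_containers "$ns" | pods_without_sidecar
}
```

Against a live cluster, `check_namespace kubeflow` prints the namespace with its `istio-injection` label column, followed by any pods that lack a sidecar. The filter is kept separate from the `kubectl` call so it can be tested without a cluster.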

Dex and Kubeflow

The situation with Dex is similar. Dex is an application running in Kubernetes that handles authentication against an external identity service. That makes sense: if the processing involves sensitive data, you want to control who may access it. And data is always sensitive, whether credit card numbers, email addresses or health data.

Dex makes this possible: it connects to an LDAP server, for example, and checks whether users who log in are allowed to. At which point Kubeflow needs Dex, for which services, and whether and how it should be wired to Istio: again, the documentation does not say.

But with kfctl, none of this matters either. A few simple commands and we have all the necessary resources, communicating with each other as intended.
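For orientation, this is roughly what an LDAP connector in Dex's configuration looks like. A minimal sketch, assuming Dex's standard `ldap` connector format; the host, DNs and the `CHANGE_ME` bind password are hypothetical placeholders, as is the helper name `ldap_connector`.

```shell
#!/bin/sh
# Sketch: print a Dex LDAP connector block (YAML) for a given host and base DN.
# All values below are placeholders. Dex uses TLS by default on port 636;
# a private CA may additionally require a rootCA entry (not shown here).

ldap_connector() {
  host="${1:?usage: ldap_connector HOST:PORT BASE_DN}"
  base_dn="${2:?usage: ldap_connector HOST:PORT BASE_DN}"
  cat <<EOF
connectors:
- type: ldap
  id: ldap
  name: LDAP
  config:
    host: ${host}
    bindDN: cn=dex,${base_dn}
    bindPW: CHANGE_ME
    userSearch:
      baseDN: ou=people,${base_dn}
      filter: "(objectClass=person)"
      username: uid
      idAttr: uid
      emailAttr: mail
      nameAttr: cn
EOF
}
```

Usage: `ldap_connector ldap.example.com:636 dc=example,dc=com > connector.yaml`. The block would then have to be merged into the existing Dex configuration; assuming the kfctl_istio_dex v1.2 layout, that configuration sits in the ConfigMap `dex` in the `auth` namespace (readable with `kubectl get configmap dex -n auth -o jsonpath='{.data.config\.yaml}'`), and Dex needs a restart afterwards, e.g. `kubectl rollout restart deployment dex -n auth`. Check these names in your own cluster first.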

```
benutzer@maschine:~/kfctl$ ./kfctl --help
A client CLI to create kubeflow applications for specific platforms or 'on-prem'
to an existing k8s cluster.

Usage:
  kfctl [command]

Available Commands:
  alpha       Alpha kfctl features.
  apply       deploys a kubeflow application.
  build       Builds a KF App from a config file
  completion  Generate shell completions
  delete      Delete a kubeflow application.
  generate    'kfctl generate' has been replaced by 'kfctl build'
              Please switch to new semantics.
              To build a KFAPP run -> kfctl build -f ${CONFIG}
              Then to install -> kfctl apply
              For more information, run 'kfctl build -h' or read the docs at www.kubeflow.org.
  help        Help about any command
  init        'kfctl init' has been removed.
              Please switch to new semantics.
              To install run -> kfctl apply -f ${CONFIG}
              For more information, run 'kfctl apply -h' or read the docs at www.kubeflow.org.
  version     Print the version of kfctl.

Flags:
  -h, --help   help for kfctl

Use "kfctl [command] --help" for more information about a command.

benutzer@maschine:~/kfctl$ ./kfctl build --help
Builds a KF App from a config file

Usage:
  kfctl build [flags]

Flags:
  -d, --dump          dump manifests to stdout, default is false
  -f, --file string   Static config file to use. Can be either a local path:
                      export CONFIG=./kfctl_gcp_iap.yaml
                      or a URL:
                      export CONFIG=https://raw.githubusercontent.com/kubeflow/manifests/v1.0-branch/kfdef/kfctl_gcp_iap.v1.0.0.yaml
                      export CONFIG=https://raw.githubusercontent.com/kubeflow/manifests/v1.2-branch/kfdef/kfctl_istio_dex.v1.2.0.yaml
                      export CONFIG=https://raw.githubusercontent.com/kubeflow/manifests/v1.2-branch/kfdef/kfctl_aws.v1.2.0.yaml
                      export CONFIG=https://raw.githubusercontent.com/kubeflow/manifests/v1.2-branch/kfdef/kfctl_k8s_istio.v1.2.0.yaml
                      kfctl build -V --file=${CONFIG}
  -h, --help          help for build
  -V, --verbose       verbose output default is false
```

The Kubeflow project provides configuration files that kfctl can read directly from a URL. If kubectl is configured correctly and points to the right cluster, the following command does the heavy lifting:

```shell
export CONFIG=https://raw.githubusercontent.com/kubeflow/manifests/v1.2-branch/kfdef/kfctl_istio_dex.v1.2.0.yaml
kfctl build -V --file=${CONFIG}
```

This creates all the resource manifests; a subsequent `kfctl apply`, as the help output above suggests, then deploys them. The procedure is based on Kustomize, so the total number of generated files is somewhat misleading (Kustomize works with layered bases and overlays, and not every file ends up as a separate resource in the cluster), but still interesting: this command creates more than 7000 files in more than 1100 directories. Perhaps this explains why there is no detailed documentation, and why the deployment cannot simply be done with a `kubectl apply`.

If you follow the changes in Kubeflow closely, these manifests can be reused in the future as well: changes can be incorporated by carefully modifying the Kustomize files from which the other manifests (the 7000+ above) are generated. This assumes that the remote-source logic used here stays consistent.
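The file and directory counts are easy to reproduce after a build. A minimal sketch, assuming kfctl was run inside a dedicated working directory, so that everything it generated lives below that directory; `count_tree` is a hypothetical helper, and the numbers will differ between Kubeflow versions.

```shell
#!/bin/sh
# Sketch: count the files and subdirectories below a directory, e.g. the
# working directory in which "kfctl build" was run.

count_tree() {
  dir="${1:?usage: count_tree DIR}"
  files=$(find "$dir" -type f | wc -l)
  dirs=$(find "$dir" -type d | wc -l)
  # subtract 1 so the top-level directory itself is not counted;
  # arithmetic expansion also strips the padding some wc versions add
  echo "files=$((files)) dirs=$((dirs - 1))"
}
```

Usage: `count_tree ~/kf-rke2` (hypothetical path) prints a single line such as `files=… dirs=…`.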
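The help output above already hints at a two-step workflow: `kfctl build` generates the manifests, `kfctl apply` deploys them. Keeping the two steps apart is what leaves room for careful modifications in between. A minimal sketch with a dry-run switch; the directory `~/kf-rke2` is hypothetical, and the name of the local KfDef copy that the build writes into that directory (`kfctl_istio_dex.v1.2.0.yaml`) is an assumption based on the kfctl v1.2 documentation, so check what your build actually produces.

```shell
#!/bin/sh
# Sketch: two-step kfctl deployment. DRY_RUN=1 prints the commands instead
# of running them. Assumptions: kfctl is on PATH, kubectl points at the
# target cluster, and the build writes a local KfDef copy into KF_DIR.

CONFIG_URI="https://raw.githubusercontent.com/kubeflow/manifests/v1.2-branch/kfdef/kfctl_istio_dex.v1.2.0.yaml"
KF_DIR="${KF_DIR:-$HOME/kf-rke2}"                  # hypothetical location
CONFIG_FILE="$KF_DIR/$(basename "$CONFIG_URI")"    # assumed local KfDef copy

# run: echo the command in dry-run mode, execute it otherwise
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Step 1: generate the manifests in KF_DIR (kfctl works in the current dir)
kf_build() {
  run mkdir -p "$KF_DIR"
  run cd "$KF_DIR"
  run kfctl build -V -f "$CONFIG_URI"
}

# Step 3: deploy from the local, possibly modified configuration
kf_apply() {
  run cd "$KF_DIR"
  run kfctl apply -V -f "$CONFIG_FILE"
}

# Step 2 happens by hand: review or adjust the Kustomize files in KF_DIR.
case "${1:-}" in
  build) kf_build ;;
  apply) kf_apply ;;
esac
```

Usage: `DRY_RUN=1 sh deploy.sh build` shows what would happen; `sh deploy.sh build`, followed by a review of the generated files and then `sh deploy.sh apply`, performs the deployment.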

And what happens next?

So kfctl was the solution to our problems. But its development has stopped, and there will be no new versions. In the future, a different solution will be needed, and we are already working on it: we are investigating the installation methods Kubeflow offers by default to see how well they handle updates, how transparent they are, and how easy they are to use. Once we have answers, we'll report back.

Gergely Szalay works as an IT consultant specializing in Kubernetes. He has many years of experience in application support.