Ansible Automation Platform

In this article, we introduce the Ansible Automation Platform, explain its architecture, and show how it efficiently addresses complex automation requirements in large-scale environments.

Why companies need the Ansible Automation Platform

Ansible is one of the most widely used automation tools, not least because it is relatively easy to set up and use. In very large environments, however, especially those with many users, a pure CLI setup quickly reaches its limits: multi-tenancy, credential management and scheduling are just some of the areas that require careful consideration.

Possible solutions come in different sizes and levels of complexity: from pure CI/CD approaches with Jenkins or GitLab CI/CD, to integrated management in Foreman variants such as orcharhino, to the Automation Platform, Red Hat's own solution.

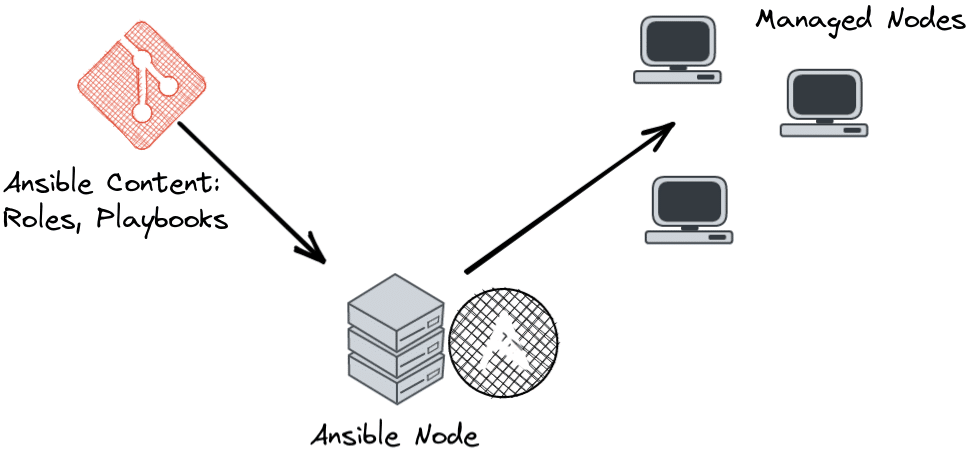

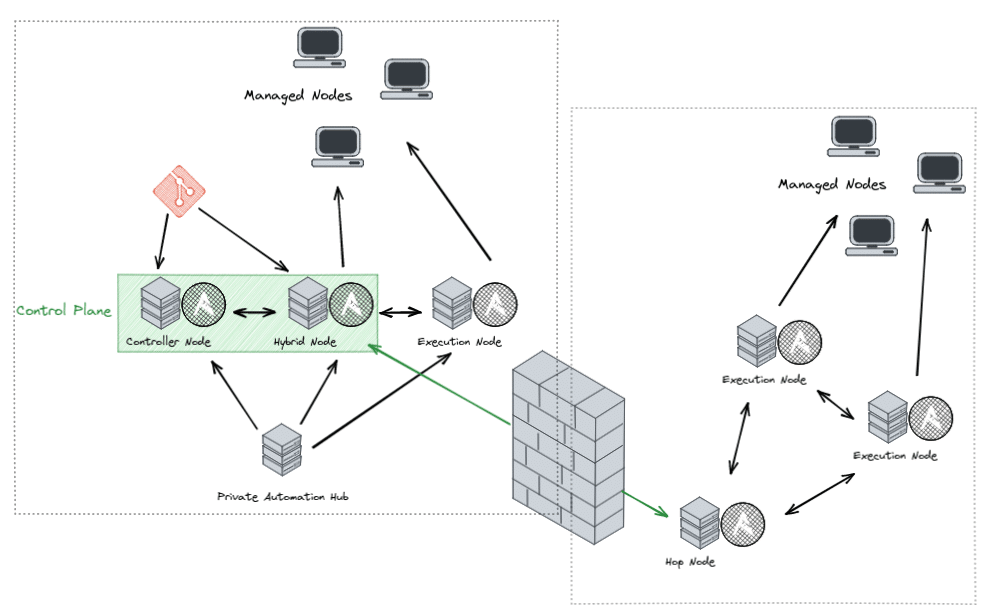

In this blog post, we outline the basic architecture and functionality of the Ansible Automation Platform. The core idea is always to separate the Ansible content from the layer that executes it, as illustrated in the figures above.

The executing layer is the Ansible Automation Controller, which is based on the open source project AWX.

AWX/Automation Controller

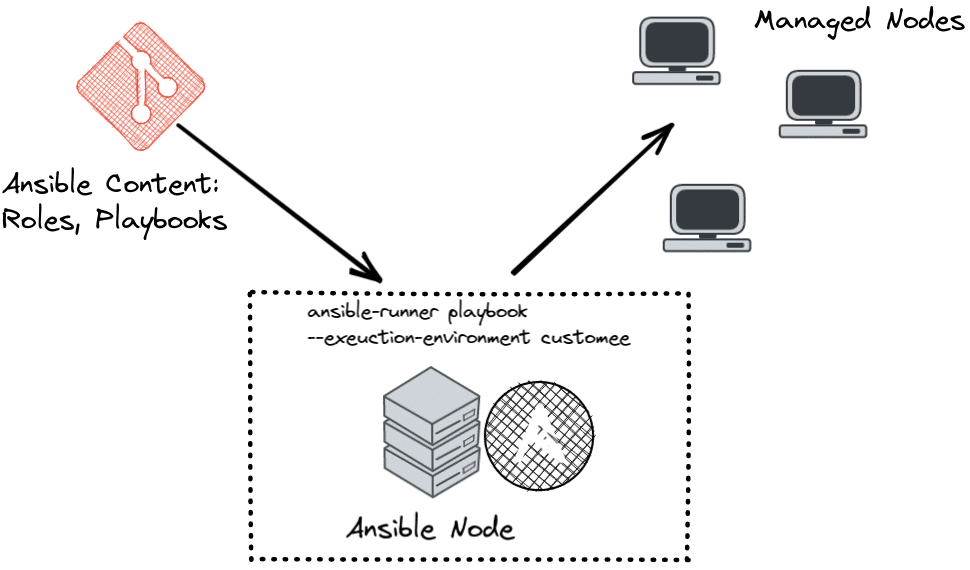

AWX and Automation Controller are essentially identical; Automation Controller is the enterprise-ready downstream variant. Internally, AWX relies on two key components: ansible-runner and Ansible execution environments.
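Because the Controller is the layer that executes content, jobs are typically started through it rather than from a workstation. As a rough sketch, the following Python snippet only builds (without sending) the POST request that launches a job template through the AWX REST API v2 (`/api/v2/job_templates/<id>/launch/`). The host name, token and template ID are hypothetical placeholders, and newer Automation Platform releases may expose the controller API under a different path prefix.

```python
from __future__ import annotations

import json
import urllib.request


def build_launch_request(base_url: str, template_id: int, token: str,
                         extra_vars: dict | None = None) -> urllib.request.Request:
    """Build (but do not send) a request that launches an AWX job template.

    base_url, template_id and token are placeholders for your own controller.
    """
    url = f"{base_url.rstrip('/')}/api/v2/job_templates/{template_id}/launch/"
    # extra_vars is only honored if the template prompts for variables on launch
    payload = {"extra_vars": extra_vars} if extra_vars else {}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # AWX OAuth2 / personal access token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` should return a JSON description of the newly created job. Building and sending are kept apart here so the request can be inspected first.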

ansible-runner is an open source Python library that simplifies the automated execution of playbooks compared to calling ansible-playbook directly. On the one hand, it isolates playbook runs from one another and allows them to run in parallel; on the other hand, it produces structured, machine-readable output (a stream of job events) instead of only the human-readable console output of a plain ansible-playbook call. Put simply, ansible-runner is a runtime interface for executing Ansible.
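To make this more concrete, here is a minimal sketch of driving ansible-runner from Python. ansible-runner reads its input from a so-called private data directory (`project/` for playbooks, `inventory/` for hosts, `env/` for optional settings); the helper below only creates that layout, while `run_playbook` calls `ansible_runner.run()`. The playbook name `site.yml` and the demo playbook are our own assumptions.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

# Illustrative playbook; any playbook placed in project/ would do.
DEMO_PLAYBOOK = """\
- name: Demo play
  hosts: all
  gather_facts: false
  tasks:
    - name: Say hello
      ansible.builtin.debug:
        msg: Hello from ansible-runner
"""


def prepare_private_data_dir(hosts: list[str], base: Path | None = None) -> Path:
    """Create the input layout ansible-runner expects in its private data directory."""
    base = Path(base) if base else Path(tempfile.mkdtemp(prefix="runner-"))
    for sub in ("project", "inventory", "env"):  # env/ may hold extravars, passwords, ...
        (base / sub).mkdir(parents=True, exist_ok=True)
    (base / "project" / "site.yml").write_text(DEMO_PLAYBOOK)
    (base / "inventory" / "hosts").write_text("\n".join(hosts) + "\n")
    return base


def run_playbook(private_data_dir: Path) -> tuple[str, int]:
    """Run project/site.yml via ansible-runner (needs ansible-runner and ansible-core)."""
    import ansible_runner  # lazy import: the layout helper above works without it

    r = ansible_runner.run(private_data_dir=str(private_data_dir), playbook="site.yml")
    for event in r.events:  # structured job events instead of raw console text
        print(event["event"])
    return r.status, r.rc  # e.g. ("successful", 0)
```

For a quick local test, `run_playbook(prepare_private_data_dir(["localhost ansible_connection=local"]))` should print the event names of the run (such as `playbook_on_start`) and return the final status and exit code; ansible-runner also stores the results in an `artifacts/` directory below the private data directory.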

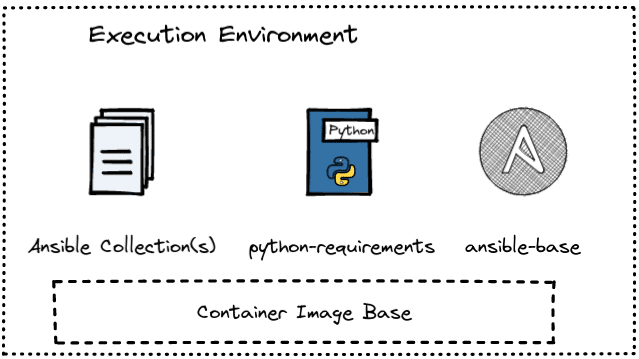

To execute Ansible jobs consistently regardless of the environment they run in, there are Ansible execution environments: OCI-compatible container images in which a playbook can be executed together with all of its dependencies.

To build one, Ansible collections, Python libraries and ansible-core (formerly ansible-base) are combined into a single container image, ideally using the open source tool ansible-builder. A finished execution environment then has the form shown in the figure above.
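As a minimal sketch of such a build context, the following Python helper writes a version 3 ansible-builder definition (`execution-environment.yml`) plus the three dependency files it refers to: `requirements.yml` for collections, `requirements.txt` for Python libraries and `bindep.txt` for system packages. The base image and the package lists are assumptions you pass in; the template is deliberately minimal and may need further options (for example for the Python interpreter) depending on the base image.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

# Minimal version 3 definition; further options may be needed for some base images.
EE_TEMPLATE = """\
version: 3
images:
  base_image:
    name: {base_image}
dependencies:
  ansible_core:
    package_pip: ansible-core
  ansible_runner:
    package_pip: ansible-runner
  galaxy: requirements.yml
  python: requirements.txt
  system: bindep.txt
"""


def write_ee_context(base_image: str, collections: list[str], python_pkgs: list[str],
                     system_pkgs: list[str], target: Path | None = None) -> Path:
    """Write execution-environment.yml and its dependency files into one directory."""
    target = Path(target) if target else Path(tempfile.mkdtemp(prefix="ee-"))
    target.mkdir(parents=True, exist_ok=True)
    (target / "execution-environment.yml").write_text(EE_TEMPLATE.format(base_image=base_image))
    # Ansible collections, installed with ansible-galaxy during the build
    (target / "requirements.yml").write_text(
        "collections:\n" + "".join(f"  - name: {c}\n" for c in collections))
    # Python libraries, installed with pip
    (target / "requirements.txt").write_text("".join(f"{p}\n" for p in python_pkgs))
    # System packages in bindep format (one package per line)
    (target / "bindep.txt").write_text("".join(f"{s}\n" for s in system_pkgs))
    return target
```

Inside the generated directory, `ansible-builder build --tag my-ee:1.0 --file execution-environment.yml` should then build the image with Podman or Docker; `my-ee:1.0` is a hypothetical tag.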

AWX then uses ansible-runner to run playbooks inside these execution environments.
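AWX handles this internally, but the same mechanism can be reproduced by hand with ansible-runner's process isolation, which is useful for testing an execution environment before publishing it. This is a minimal sketch, assuming Podman is installed and an image with the hypothetical tag `my-ee:1.0` exists locally; it is not how AWX itself invokes ansible-runner.

```python
from __future__ import annotations


def ee_run_kwargs(private_data_dir: str, playbook: str, image: str,
                  engine: str = "podman") -> dict:
    """Keyword arguments for ansible_runner.run() that execute the playbook
    inside a container started from an execution environment image."""
    return {
        "private_data_dir": private_data_dir,
        "playbook": playbook,
        "process_isolation": True,               # run in a container, not on the host
        "process_isolation_executable": engine,  # container engine: podman or docker
        "container_image": image,                # the execution environment to use
    }


def run_in_ee(**kwargs):
    """Start the run; requires ansible-runner on the host and the image locally."""
    import ansible_runner

    return ansible_runner.run(**kwargs)
```

`run_in_ee(**ee_run_kwargs("/tmp/demo", "site.yml", "my-ee:1.0"))` would then run the playbook with the collections and libraries baked into the image rather than those installed on the host.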

Private Automation Hub aka Private Galaxy

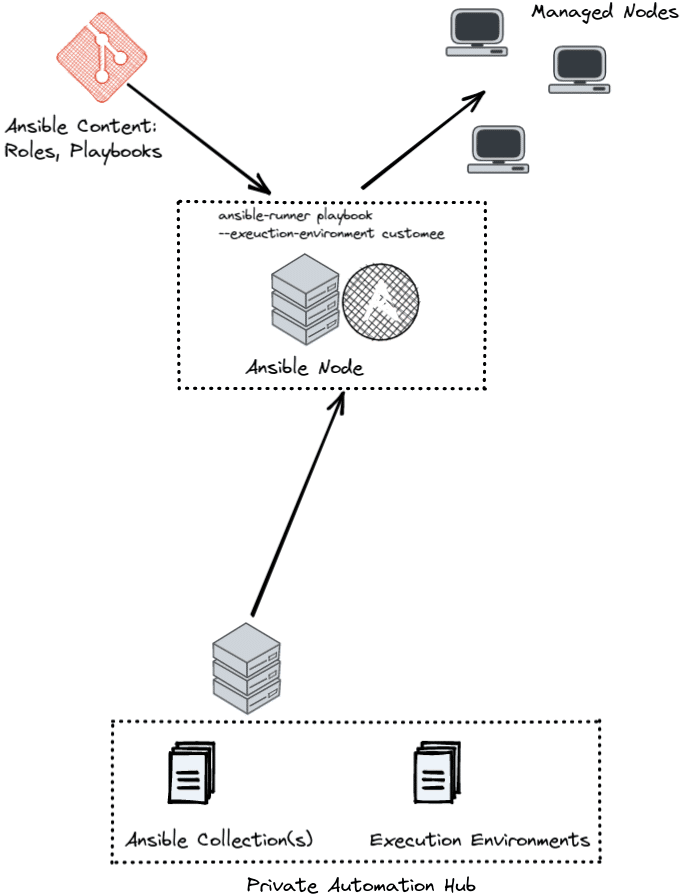

AWX ships with pre-built execution environments. If you want to build and use your own, however, the question arises where best to store them. In principle, any OCI-compatible container registry will do.

If you want to kill two birds with one stone, however, you can consider running your own Galaxy instance. Every Ansible user comes across the official Ansible Galaxy sooner or later, as it is the central community hub for all kinds of Ansible content. As part of the enterprise Ansible Automation Platform, Private Automation Hub lets you run an on-premises equivalent of Ansible Galaxy and manage your own content there.

Non-enterprise users can benefit from such an on-premises installation, too. Private Automation Hub is also based on an open source project, in this case Pulp. You may already know Pulp as the backend component of Katello in the orcharhino/Foreman/Satellite environment. Installation instructions for the purely open source variant are available on GitHub and YouTube.

Whether you use the enterprise Private Automation Hub or the open source Galaxy, the component ultimately manages both execution environments and your own Ansible collections. AWX nodes can then pull this content when running playbooks.
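Outside AWX, collections from such a hub are typically consumed by pointing `ansible-galaxy` at it in `ansible.cfg`. The sketch below generates such a configuration with Python's `configparser`; the server names `private_hub` and `public_galaxy`, the hub URL and the token are hypothetical, and the exact API path of your hub may differ between versions.

```python
from __future__ import annotations

import configparser
import io


def galaxy_cfg(hub_url: str, token: str | None = None) -> str:
    """Return ansible.cfg text that makes ansible-galaxy query a private hub first
    and fall back to the public Ansible Galaxy."""
    cfg = configparser.ConfigParser(interpolation=None)  # treat '%' in tokens literally
    # Servers are tried in the order given in server_list.
    cfg["galaxy"] = {"server_list": "private_hub, public_galaxy"}
    hub = {"url": hub_url}
    if token:
        hub["token"] = token  # keep real tokens out of version control
    cfg["galaxy_server.private_hub"] = hub
    cfg["galaxy_server.public_galaxy"] = {"url": "https://galaxy.ansible.com/"}
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()
```

Within AWX itself, the equivalent is a Galaxy/Automation Hub API token credential assigned to the organization, so a file like this mainly matters for local development. Execution environment images, in contrast, are pulled from the hub's container registry like from any other registry.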

Automation Mesh

AWX/Automation Controller can also be clustered for high availability and greater parallelism. For this purpose, AWX uses Automation Mesh, which is built on the open source project receptor.

This allows different nodes in an AWX cluster to take on different roles:

- Control nodes form the so-called control plane. They take care of all management tasks, such as dispatching jobs or syncing Ansible content with its source, which is usually a Git repository.

- Execution nodes only run the Ansible jobs that have been assigned to them.

- Hybrid nodes are – as the name suggests – both part of the control plane and execution nodes.
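The division of roles can be summarized in a small conceptual model. This is our own illustration in Python, not code from AWX; the role names mirror the node types listed above, plus hop nodes, which only forward traffic.

```python
from __future__ import annotations

from enum import Enum


class NodeType(Enum):
    CONTROL = "control"      # control plane only
    EXECUTION = "execution"  # runs jobs only
    HYBRID = "hybrid"        # control plane and runs jobs
    HOP = "hop"              # only relays mesh traffic


def in_control_plane(node_type: NodeType) -> bool:
    return node_type in (NodeType.CONTROL, NodeType.HYBRID)


def runs_jobs(node_type: NodeType) -> bool:
    return node_type in (NodeType.EXECUTION, NodeType.HYBRID)


def split_cluster(cluster: dict[str, NodeType]) -> tuple[list[str], list[str]]:
    """Return (control plane nodes, nodes that can run jobs), each sorted by name."""
    control = sorted(name for name, t in cluster.items() if in_control_plane(t))
    workers = sorted(name for name, t in cluster.items() if runs_jobs(t))
    return control, workers
```

In this model a hybrid node appears in both lists, while a hop node appears in neither.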

If the Automation Platform installation spans multiple networks, for example across DMZ boundaries, hop nodes can also be deployed. They act purely as relays in the receptor network and do not run jobs themselves. As shown in the picture, the firewall then only needs to allow traffic to the hop node.
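Why a single firewall opening is enough can be illustrated with a simple path search over the mesh. The topology and node names below are hypothetical, and we model each established receptor connection as bidirectional; real receptor routing is more involved than this breadth-first search.

```python
from __future__ import annotations

from collections import deque

# Hypothetical topology: ctrl1 sits in the internal network, hop1 at the DMZ
# boundary, exec-dmz1/2 inside the DMZ. Each pair is one allowed connection.
LINKS = {("ctrl1", "hop1"), ("hop1", "exec-dmz1"), ("hop1", "exec-dmz2")}


def route(links: set[tuple[str, str]], src: str, dst: str) -> list[str] | None:
    """Breadth-first search for a shortest path from src to dst, or None."""
    graph: dict[str, set[str]] = {}
    for a, b in links:  # treat each established connection as bidirectional
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    previous: dict[str, str | None] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk back to src
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in sorted(graph.get(node, ())):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None
```

Here `route(LINKS, "ctrl1", "exec-dmz2")` yields `["ctrl1", "hop1", "exec-dmz2"]`, and removing the single link between `ctrl1` and `hop1` leaves both DMZ execution nodes unreachable, which mirrors the role of the one firewall rule.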

The modular design of the Automation Platform allows very flexible deployments: whether fully containerized on Kubernetes/OpenShift, as virtual machines, on bare metal or as a mixture, there are hardly any limits to creativity.

Feel free to contact us to discuss or design your own use case. We are looking forward to your inquiry!